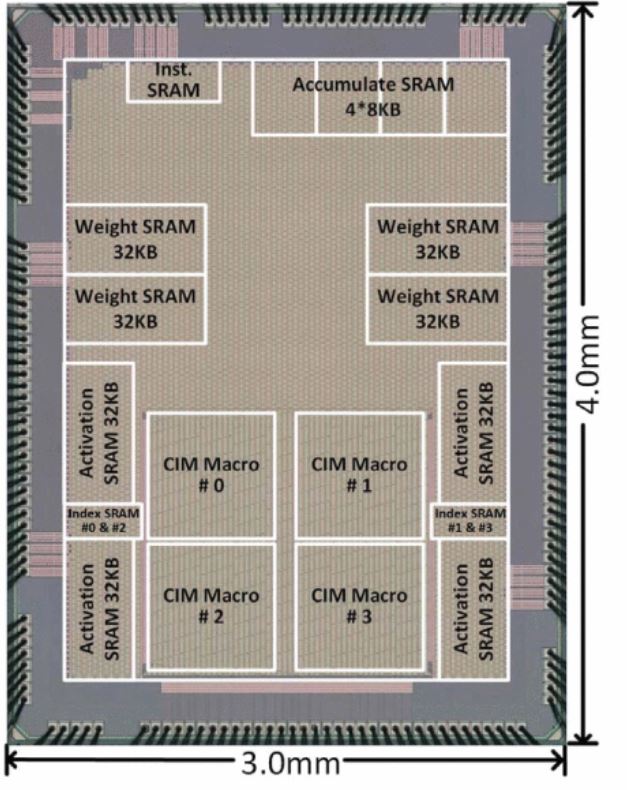

15.2 A 2.75-to-75.9TOPS/W Computing-in-Memory NN Processor Supporting Set-Associate Block-Wise Zero Skipping and Ping-Pong CIM with Simultaneous Computation and Weight Updating

Abstract

Computing-in-memory (CIM) is an attractive approach for energy-efficient neural network (NN) processors, especially for low-power edge devices. Previous CIM chips [1]–[5] have demonstrated macro- and system-level designs that enable multi-bit operations and sparsity support. However, several challenges remain, as shown in Fig. 15.2.1. First, although a previously proposed block-wise sparsity strategy [5] can power off ADCs, zeros still contribute to storage requirements, and power gating is not applied to computing resources. Second, on-chip SRAM CIM macros are not large enough to hold all weights, so updating weights between computing operations leads to significant performance loss. Finally, the limited sensing margin incurs poor accuracy for large NN models on practical datasets, such as ImageNet. The precision and power of the ADCs should therefore be optimized and adjusted.